Floating point numbers are usually represented in the form

$x = \pm m \times r^{e}$

where

m is the mantissa

e is the exponent

r is the base

usually $1 > m \geq r^{-1}$
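For base r = 2, Python's standard `math.frexp` performs this kind of decomposition: it returns a mantissa m with 0.5 ≤ |m| < 1 together with an integer exponent e. The snippet below is only a small sketch, and the value 754.7 and the variable names are chosen for illustration.

```python
import math

x = 754.7

# math.frexp splits x into (m, e) such that x == m * 2**e and 0.5 <= |m| < 1,
# which is the normalization 1 > m >= r**-1 for base r = 2.
m, e = math.frexp(x)

print(m, e)
print(m * 2**e == x)  # the split only moves the exponent, so nothing is rounded
```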

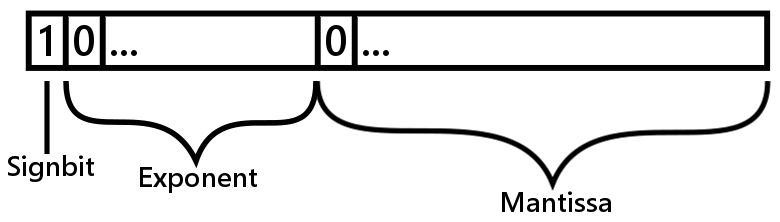

Physically it looks like this:

According to the IEEE 754 standard a single precision floating point number is 4 bytes. It has one sign bit. The exponent is 8 bits and the mantissa is 23 bits.

A double precision number is 8 bytes long. It still has one sign bit but the exponent is 11 bits and the mantissa is 52 bits long.
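As a quick sanity check of these sizes, Python's standard `struct` module can report how many bytes the two formats occupy. This is a sketch; the tuples `SINGLE` and `DOUBLE` are names made up here to hold the field widths quoted above.

```python
import struct

# Sizes in bytes of the standard single and double precision encodings.
print(struct.calcsize("<f"))  # 4
print(struct.calcsize("<d"))  # 8

# Field widths in bits: (sign, exponent, mantissa).
SINGLE = (1, 8, 23)
DOUBLE = (1, 11, 52)
print(sum(SINGLE), sum(DOUBLE))  # 32 64
```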

If x and y are floating point numbers it's usually no good to test in code whether x and y are equal like this:

    if (x == y)
    {
        i = 123;
    }

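Rounding is the reason: two results that are mathematically equal can differ in their last bits. A common alternative, sketched here in Python, is to compare within a tolerance. `math.isclose` is part of the standard library; the tolerance values used below are assumptions and have to be chosen to suit the application.

```python
import math

x = 0.1 + 0.2
y = 0.3

# Exact comparison fails because 0.1, 0.2 and 0.3 are not exactly representable.
print(x == y)  # False

# Compare within a relative tolerance instead (1e-9 is an assumed, illustrative value).
print(math.isclose(x, y, rel_tol=1e-9))  # True

# A hand-written absolute-tolerance check; epsilon is likewise an assumption.
epsilon = 1e-9
print(abs(x - y) < epsilon)  # True
```

A relative tolerance scales with the size of the numbers, while an absolute tolerance is simpler but only makes sense when the expected magnitude of x and y is known.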

Prerequisites: The remainder left over when one integer is divided by another is the result of the modulo operation on these two numbers. This is denoted as x % y in C# or Java. I will denote integer division using the / symbol.

Thus: 3 / 2 = 1;

9 % 5 = 4;
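For readers following along in Python rather than C# or Java: integer division is spelled `//` there (a plain `/` always produces a float), while `%` behaves the same for the non-negative numbers used in this article. A minimal sketch:

```python
# Integer division is written // in Python; / would give 1.5 here.
print(3 // 2)  # 1

# The remainder operator is % as in C# or Java.
print(9 % 5)   # 4

# divmod returns quotient and remainder in one call.
quotient, remainder = divmod(754, 2)
print(quotient, remainder)  # 377 0
```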

Converting a decimal number into an IEEE 754 single precision floating point number can be accomplished using the algorithm described below, shown here for the number 754.7:

You start with the integer part of 754.7, which is 754.

- 754 % 2 = 0

- 754 / 2 = 377. 377 % 2 = 1

- 377 / 2 = 188. 188 % 2 = 0

- 188 / 2 = 94. 94 % 2 = 0

- 94 / 2 = 47. 47 % 2 = 1

- 47 / 2 = 23. 23 % 2 = 1

- 23 / 2 = 11. 11 % 2 = 1

- 11 / 2 = 5. 5 % 2 = 1

- 5 / 2 = 2. 2 % 2 = 0

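- 2 / 2 = 1. 1 % 2 = 1

The next quotient, 1 / 2 = 0, ends the process. Reading the remainders from the last one to the first gives 1011110010.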
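The repeated division above can be sketched in Python as follows. The function name `int_to_binary` is made up for this illustration. The remainders come out least significant bit first, so they are reversed at the end.

```python
def int_to_binary(n):
    """Convert a non-negative integer to a binary string by repeated division by 2."""
    if n == 0:
        return "0"
    remainders = []
    while n > 0:
        remainders.append(str(n % 2))  # the remainder is the next binary digit
        n = n // 2                     # continue with the integer quotient
    # The first remainder is the least significant bit, so reverse the list.
    return "".join(reversed(remainders))

print(int_to_binary(754))  # 1011110010
```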

The decimal numeral system works like this:

$754 = 7 \times 10^{2} + 5 \times 10^{1} + 4 \times 10^{0}$

The binary numeral system works exactly the same way. But instead of using powers of ten you now use powers of two like this:

Let's see if we were right! Is 1011110010 = 754?

$1011110010_2 = 1 \times 512 + 0 \times 256 + 1 \times 128 + 1 \times 64 + 1 \times 32 + 1 \times 16 + 0 \times 8 + 0 \times 4 + 1 \times 2 + 0 \times 1 = 754$
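The same check can be done programmatically. The sketch below evaluates a string of binary digits using powers of two; `binary_to_int` is a hypothetical helper name, and Python's built-in `int(bits, 2)` does the same job.

```python
def binary_to_int(bits):
    """Evaluate a string of binary digits using powers of two."""
    value = 0
    for position, digit in enumerate(reversed(bits)):
        value += int(digit) * 2**position  # digit times its place value
    return value

print(binary_to_int("1011110010"))  # 754
```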

Now for the decimals:

The fractional part of 754.7 is 0.7.

- 0.7 * 2 = 1.4 keep the digit in front of the decimal point, which is 1

- Now discard the 1 in 1.4 and continue with 0.4. The number you multiply must always be less than 1

- 0.4 * 2 = 0.8 keep the 0

- 0.8 * 2 = 1.6 keep the 1

- 0.6 * 2 = 1.2 keep the 1

- 0.2 * 2 = 0.4 keep the 0

- 0.4 * 2 = 0.8 keep the 0

- 0.8 * 2 = 1.6 keep the 1

- 0.6 * 2 = 1.2 keep the 1

- 0.2 * 2 = 0.4 keep the 0

- 0.4 * 2 = 0.8 keep the 0

- 0.8 * 2 = 1.6 keep the 1

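The digits 0110 now repeat forever, so 0.7 cannot be written exactly with a finite number of binary digits. Putting the integer part and the fractional part together gives:

1011110010.101100110011001100110011001100110011...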
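The doubling procedure for the fractional part can be sketched like this. The helper name `fraction_to_binary` is an assumption, and `fractions.Fraction` is used so that 0.7 is held exactly; a float literal `0.7` would already be a rounded binary value.

```python
from fractions import Fraction

def fraction_to_binary(frac, digits):
    """Return the first `digits` binary digits of a fraction 0 <= frac < 1."""
    bits = []
    for _ in range(digits):
        frac *= 2
        if frac >= 1:
            bits.append("1")
            frac -= 1      # discard the digit in front of the point
        else:
            bits.append("0")
    return "".join(bits)

# Fraction(7, 10) is exactly 0.7.
print(fraction_to_binary(Fraction(7, 10), 12))  # 101100110011
```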

Transform this into scientific notation. The decimal point has to be shifted 9 steps to the left. You get $1.011110010101100110011001100110011001100110011\ldots \times 2^{9}$.
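Counting the shift can be automated once the integer and fractional digits are available as strings. The following is a sketch under that assumption; `normalize` is a hypothetical helper, and it assumes a non-zero value.

```python
def normalize(int_bits, frac_bits):
    """Return (digits after the leading 1, exponent) for int_bits.frac_bits.

    Assumes the value is non-zero, i.e. the bit strings contain a 1 somewhere.
    """
    int_bits = int_bits.lstrip("0")
    if int_bits:
        # The point moves left past every integer digit except the leading 1.
        exponent = len(int_bits) - 1
        return int_bits[1:] + frac_bits, exponent
    # No integer part: the point moves right to just after the first 1.
    first_one = frac_bits.index("1")
    return frac_bits[first_one + 1:], -(first_one + 1)

digits, exponent = normalize("1011110010", "101100110011")
print(digits, exponent)  # 011110010101100110011 9
```

A number smaller than 1 gives a negative exponent, for example `normalize("0", "0011")` returns `("1", -3)`.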

To be able to use both negative and positive exponents, IEEE 754 specifies a bias for the exponent. For single precision this bias is 127. Add to 127 the number of times we shifted the decimal point to the left in the step above: 127 + 9 = 136. If we had shifted it 9 steps to the right instead, it would have been 127 - 9 = 118. Now convert 136 into a binary number:

- 136 % 2 = 0

- 136 / 2 = 68. 68 % 2 = 0

- 68 / 2 = 34. 34 % 2 = 0

- 34 / 2 = 17. 17 % 2 = 1

- 17 / 2 = 8. 8 % 2 = 0

- 8 / 2 = 4. 4 % 2 = 0

- 4 / 2 = 2. 2 % 2 = 0

- 2 / 2 = 1. 1 % 2 = 1

Reading the remainders from the last one to the first gives 10001000, which is the 8-bit exponent.
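Adding the bias and writing the result as 8 binary digits can be sketched as below. `BIAS` and `exponent_field` are names invented for this example. The range check reflects that the stored values 0 and 255 are reserved in single precision for zero/subnormal numbers and for infinity/NaN.

```python
BIAS = 127  # exponent bias for IEEE 754 single precision

def exponent_field(exponent):
    """Return the 8-bit biased exponent field for a single-precision number."""
    biased = exponent + BIAS
    # Normal numbers use biased values 1..254; 0 and 255 are reserved.
    if not 1 <= biased <= 254:
        raise ValueError("exponent out of range for a normal single-precision number")
    return format(biased, "08b")

print(exponent_field(9))   # 10001000
print(exponent_field(-9))  # 01110110
```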

Our mantissa is the first 23 bits of the digits after the decimal place in our scientific notation of 754.7, which was 1.011110010101100110011001100110011001100110011...

So it's

011110010101100110011001100110011001100110011...

Using this to compile the IEEE 754 representation of 754.7 gives us:

Sign bit: 0 (the number is positive)

Exponent: 10001000

Mantissa: 01111001010110011001100

Most floating point systems use rounding instead of chopping. If you prefer chopping, the mantissa 01111001010110011001100 would be correct. But IEEE 754 uses rounding. The part of the mantissa which was cut off started 110011001100110011001100...

The first cut-off digit decides the direction. Just like when you round 1.7 to 2.0 in decimal, where 0-4 is rounded downwards and 5-9 is rounded upwards, in binary a first cut-off digit of 1 means rounding upwards and 0 means rounding downwards. (The one exception is an exact tie, a 1 followed only by zeros, which the default IEEE 754 rounding mode resolves by picking the mantissa whose last bit is 0. That does not happen here.)

So the mantissa

01111001010110011001100

is rounded to: 01111001010110011001101
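The rounding step can be sketched as follows, assuming the digits after the leading 1 are available as a string. `round_mantissa` is a hypothetical helper implementing round to nearest with ties to even. Because the input is a finite prefix of an infinitely repeating expansion, the tie test is only meaningful when enough digits are supplied.

```python
def round_mantissa(bits, width=23):
    """Round a string of fraction bits to `width` bits, nearest with ties to even.

    Returns (rounded_bits, carry); carry is 1 if rounding overflowed past
    the kept bits (the exponent would then need to be increased by 1).
    """
    kept, cut = bits[:width].ljust(width, "0"), bits[width:]
    value = int(kept, 2)
    if cut and cut[0] == "1":
        more_than_half = "1" in cut[1:]
        is_odd = value % 2 == 1
        if more_than_half or is_odd:
            value += 1
    carry = value >> width        # 1 only if all kept bits were 1 and we rounded up
    value &= (1 << width) - 1
    return format(value, "0{}b".format(width)), carry

# Digits of 754.7 after the leading 1: nine bits from the integer part,
# then the expansion of 0.7, which is a 1 followed by 0110 repeating.
bits = "011110010" + "1" + "0110" * 8
print(round_mantissa(bits))  # ('01111001010110011001101', 0)
```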

Putting the sign bit, exponent and mantissa together it becomes 0 10001000 01111001010110011001101

or

DONE!
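The hand-computed result can be cross-checked against what the machine actually stores. This sketch uses Python's standard `struct` module to pack 754.7 as a big-endian single precision number and slices the 32 bits into the three fields; `float32_bits` is a name chosen for this example.

```python
import struct

def float32_bits(x):
    """Return the 32-bit IEEE 754 single-precision pattern of x as a bit string."""
    (as_int,) = struct.unpack(">I", struct.pack(">f", x))
    return format(as_int, "032b")

bits = float32_bits(754.7)
sign, exponent, mantissa = bits[0], bits[1:9], bits[9:]
print(sign, exponent, mantissa)  # 0 10001000 01111001010110011001101
print(hex(int(bits, 2)))         # 0x443caccd
```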